Introduction

This project started as an improvement on the AI that David Mikulic and I made for our last game project during our education at FutureGames. The goal was to create an AI system for a stealth game that can remember more than one thing and act on those memories according to what’s most important at the moment, making it seem smarter than the average NPC guard :).

I also created a customizable waypoints system to allow for easy setup of patrol paths in editor!

All the code for it has been written in C++, exposing functionality to the editor through custom DataAssets and Blueprint APIs.

Fuzzy Brain basics

The fulcrum of this AI is UFuzzyBrainComponent, an ActorComponent that manages an array of FWeightedSignals and passes the currently most interesting one to the Blackboard of a Behavior Tree (BT from now on). In this example the signals are generated by two senses, Hearing and Sight, but it’d be trivial to add further signal generators without having to modify the FuzzyBrain itself.

Once a WeightedSignal is received, it gets added to the array that represents the AI’s memory. Every tick, the memory is evaluated and the currently most interesting signal gets written to the Blackboard, so the BT can act on it.

Every tick, all signals in memory decay by PrejudiceDecay, which is tweakable in editor, to make the AI give less importance to older signals.
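
As a rough illustration of the loop described above, here is a minimal sketch in plain C++ with no engine types. It is not the project's actual code: `WeightedSignal` and `FuzzyBrainSketch` are hypothetical stand-ins for FWeightedSignal and UFuzzyBrainComponent, and both the linear decay (PrejudiceDecay subtracted per second) and the forgetting of fully faded signals are assumptions.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for FWeightedSignal (not the project's actual struct).
struct WeightedSignal {
    std::string Source;  // which sense produced it, e.g. "Sight" or "Hearing"
    float Weight;        // how interesting the signal currently is
};

// Hypothetical stand-in for the memory handling in UFuzzyBrainComponent.
class FuzzyBrainSketch {
public:
    explicit FuzzyBrainSketch(float InPrejudiceDecay)
        : PrejudiceDecay(InPrejudiceDecay) {}

    // Senses push signals here; the brain never needs to know who sent them.
    void ReceiveSignal(const WeightedSignal& Signal) { Memory.push_back(Signal); }

    // Called once per tick. Decays every signal (assumed: linear decay),
    // forgets the ones that faded out (assumed), and returns the most
    // interesting one left, or nullptr if the memory is empty.
    // The pointer is only valid until the next ReceiveSignal or Tick call.
    const WeightedSignal* Tick(float DeltaSeconds) {
        for (WeightedSignal& Signal : Memory) {
            Signal.Weight -= PrejudiceDecay * DeltaSeconds;
        }
        Memory.erase(
            std::remove_if(Memory.begin(), Memory.end(),
                           [](const WeightedSignal& S) { return S.Weight <= 0.0f; }),
            Memory.end());
        if (Memory.empty()) {
            return nullptr;
        }
        return &*std::max_element(
            Memory.begin(), Memory.end(),
            [](const WeightedSignal& A, const WeightedSignal& B) {
                return A.Weight < B.Weight;
            });
    }

    std::size_t MemorySize() const { return Memory.size(); }

private:
    float PrejudiceDecay;                // weight lost per second (assumed unit)
    std::vector<WeightedSignal> Memory;  // everything the AI currently remembers
};
```

In the real component the result of the per-tick evaluation is written to the Blackboard; in this sketch the caller would simply use the returned pointer.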

Signal Severity and priority

To allow a Behavior Tree to drive the AI’s behavior based on the Fuzzy Brain’s signals, a customizable set of Signal Severities has been defined. This makes it possible to read the continuous weight of the most interesting signal as a more BT-friendly discrete value.

Severity thresholds

The severity thresholds can be defined by the designer in-editor in any UFuzzyBrainComponent as shown here. Those thresholds can then be used as conditional decorators to allow the BT to easily switch between behaviors according to the current severity.
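
To make the weight-to-severity mapping concrete, here is a small plain C++ sketch. The names (`SeverityThreshold`, `SeverityForWeight`) and the example severities in the note below are hypothetical, and the lookup assumes the thresholds are sorted by ascending minimum weight; the actual component may store and evaluate them differently.

```cpp
#include <string>
#include <vector>

// Hypothetical threshold entry: a designer-facing name plus the minimum
// signal weight at which that severity starts.
struct SeverityThreshold {
    std::string Name;
    float MinWeight;
};

// Returns the index of the highest severity whose MinWeight is <= Weight,
// or -1 if the weight is below every threshold.
// Assumes SortedThresholds is ordered by ascending MinWeight.
int SeverityForWeight(const std::vector<SeverityThreshold>& SortedThresholds,
                      float Weight) {
    int Result = -1;
    for (int Index = 0; Index < static_cast<int>(SortedThresholds.size()); ++Index) {
        if (Weight < SortedThresholds[Index].MinWeight) {
            break;  // every later threshold is higher still
        }
        Result = Index;
    }
    return Result;
}
```

With thresholds such as Calm (0), Suspicious (2) and Alerted (5), a weight of 3 would resolve to Suspicious, which is the kind of discrete value a BT decorator can compare against.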

Signal priority

The big advantage of this Fuzzy Brain-driven AI is that it can store multiple signals in memory while always focusing on the one with the highest priority. In this example, the AI is storing the noise signals from the distractions the player is throwing past it, but it's still acting on the much higher-weight vision signal that it's getting from detecting the player in its narrow vision cone (see sight section below!).

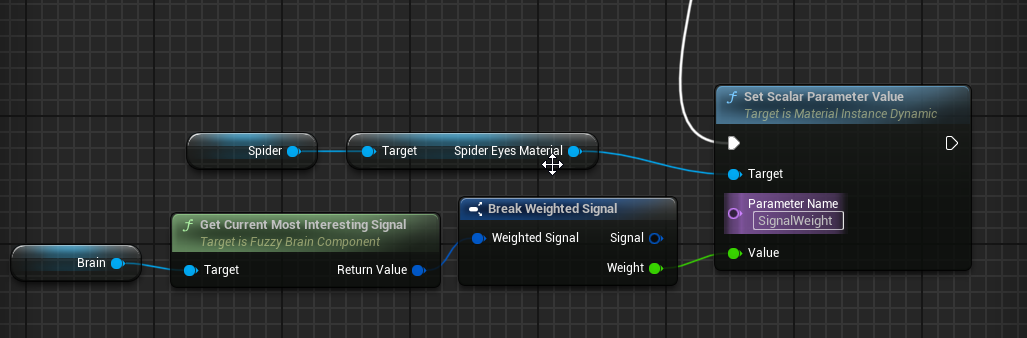

Using the raw signal weight

While using severity thresholds is ideal in most BT-related applications, the float weight of the current most interesting signal is also available from UFuzzyBrainComponent's BP API, defined in C++ code. It can be used, for example, for visual effects like this (very programmer-arty :D) glow on the spider's eyes, which increases as the spider receives stronger and stronger signals, in this case from seeing the player. (Movement has been intentionally stopped while recording this GIF for clarity.)

Hearing

UHearingComponent is one of the two custom senses made for this project. I opted to build a solution from scratch rather than relying on UE’s already existing sensing system, to guarantee maximum customizability and full integration with the FuzzyBrain while keeping the code simple and lightweight.

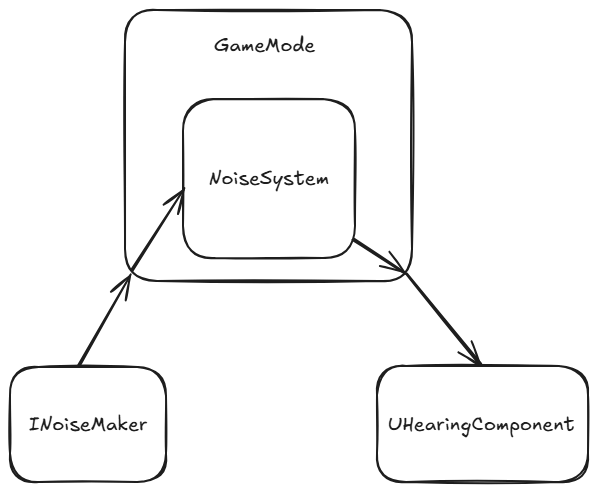

Code architecture

I tried to keep noise makers and hearing components as decoupled as possible. The GameMode creates a singleton of UNoiseSystem, and that is the only object anything hearing-related talks to.

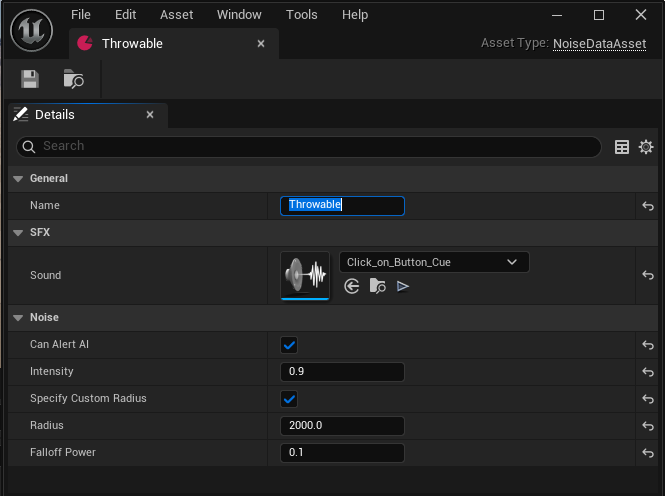

Noise Events

To keep everything as designer-friendly as possible, everything that generates noise is defined by a Noise Data Asset. This allows in-engine tweaking of the sound's radius (represented by the purple wireframe in the GIFs), intensity, distance-based falloff and much more. A sound effect can also be passed in, and it'll be played by UNoiseSystem as a 3D SFX at the correct location.
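
The decoupling and the data-driven noise events described above could look roughly like this in plain C++. Everything here is a hypothetical sketch, not the project's code: `NoiseData` stands in for a Noise Data Asset with only two of its parameters, `NoiseSystemSketch` for UNoiseSystem, and the linear distance falloff is an assumption (the real asset exposes a tweakable falloff). Playing the sound effect is left out.

```cpp
#include <cmath>
#include <functional>
#include <utility>
#include <vector>

struct Vec3 { float X; float Y; float Z; };

inline float Distance(const Vec3& A, const Vec3& B) {
    const float DX = A.X - B.X;
    const float DY = A.Y - B.Y;
    const float DZ = A.Z - B.Z;
    return std::sqrt(DX * DX + DY * DY + DZ * DZ);
}

// Hypothetical stand-in for a Noise Data Asset (only two parameters shown).
struct NoiseData {
    float Radius;     // how far the noise can be heard
    float Intensity;  // signal weight at the noise's origin
};

// Hypothetical stand-in for a hearing component registered with the system.
struct NoiseListener {
    Vec3 Location;
    std::function<void(float Weight, const Vec3& Origin)> OnHeard;
};

// Hypothetical stand-in for UNoiseSystem: noise makers and listeners only
// ever talk to this object, never to each other.
class NoiseSystemSketch {
public:
    void RegisterListener(NoiseListener Listener) {
        Listeners.push_back(std::move(Listener));
    }

    // Notifies every listener inside the radius and returns how many heard it.
    // Assumed falloff: full Intensity at the origin, fading linearly to zero
    // at Radius.
    int MakeNoise(const NoiseData& Noise, const Vec3& Origin) const {
        int HeardBy = 0;
        for (const NoiseListener& Listener : Listeners) {
            const float Dist = Distance(Listener.Location, Origin);
            if (Dist >= Noise.Radius) {
                continue;  // out of earshot (also skips a zero radius)
            }
            const float Weight = Noise.Intensity * (1.0f - Dist / Noise.Radius);
            Listener.OnHeard(Weight, Origin);
            ++HeardBy;
        }
        return HeardBy;
    }

private:
    std::vector<NoiseListener> Listeners;
};
```

A hearing component's callback would typically turn the received weight into a signal for the brain, so a thrown distraction ends up in memory without the thrower knowing any AI exists.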

Sight

USightComponent is one of the two custom senses made for this project. I opted to build a solution from scratch rather than relying on UE’s already existing sensing system, to guarantee maximum customizability and full integration with the FuzzyBrain while keeping the code simple and lightweight.

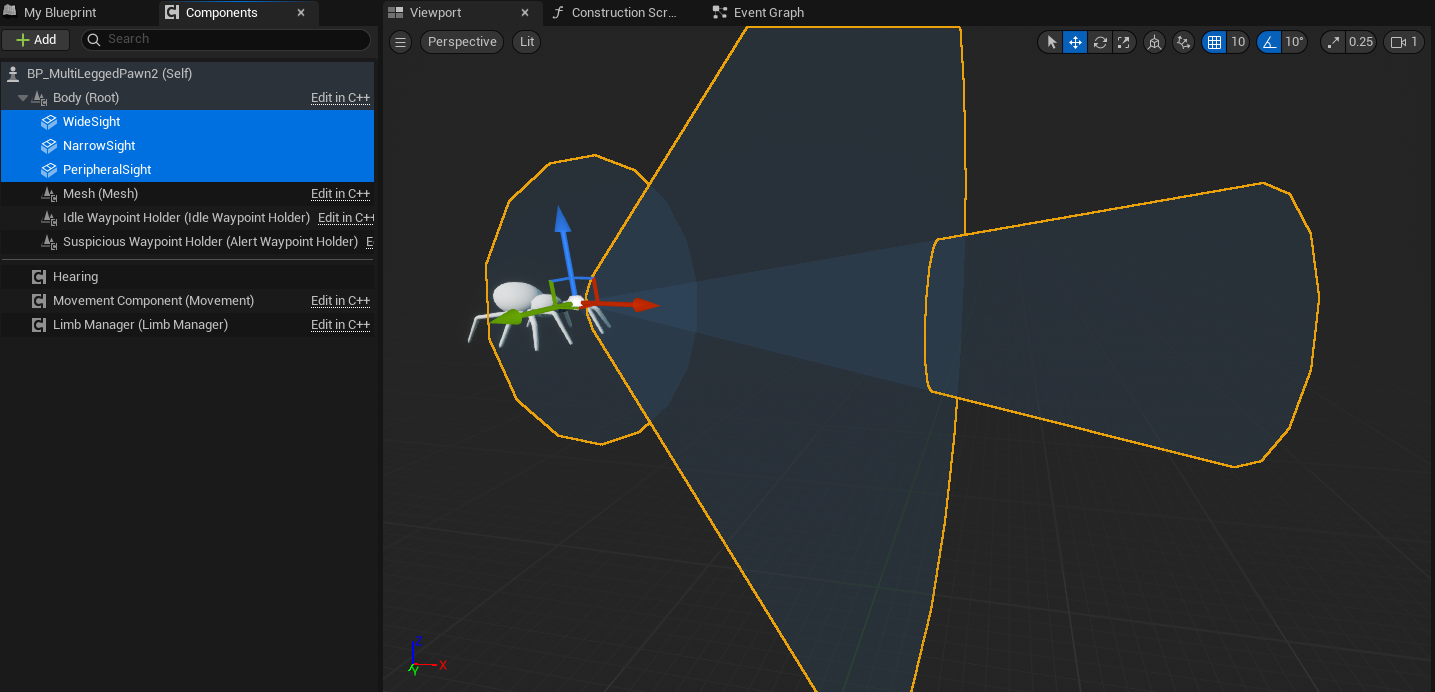

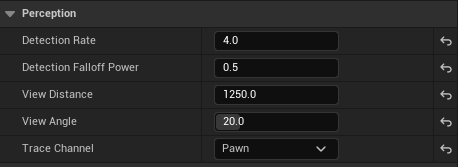

Sight component setup

The BP for the AI pawn can have any number of USightComponents. Each component can be tweaked through the parameters shown below, creating multiple vision cones with different sensitivities. In this example, the narrow vision cone detects things much quicker than the wider ones!
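
One way to picture several cones with different sensitivities is the plain C++ sketch below. It is only an illustration under stated assumptions: the struct and function names are hypothetical, the test is done in 2D for brevity, occlusion checks are omitted, and summing the gain of all cones that see the target is an assumption rather than the component's documented behavior.

```cpp
#include <cmath>
#include <vector>

struct Vec2 { float X; float Y; };

// Hypothetical subset of a sight component's tweakable parameters.
struct SightConeSketch {
    float HalfAngleDegrees;  // half of the cone's opening angle
    float Range;             // maximum detection distance
    float DetectionRate;     // signal weight gained per second while visible
};

// True if Target lies within the cone's range and angle.
// Forward is assumed to be a normalized direction.
bool IsInCone(const SightConeSketch& Cone, const Vec2& Eye,
              const Vec2& Forward, const Vec2& Target) {
    const float DX = Target.X - Eye.X;
    const float DY = Target.Y - Eye.Y;
    const float Dist = std::sqrt(DX * DX + DY * DY);
    if (Dist > Cone.Range) {
        return false;
    }
    if (Dist == 0.0f) {
        return true;  // target is exactly at the eye position
    }
    // Compare the cosine of the angle to the target with the cosine of
    // the half angle: a larger cosine means a smaller angle.
    const float CosToTarget = (DX * Forward.X + DY * Forward.Y) / Dist;
    const float CosHalfAngle =
        std::cos(Cone.HalfAngleDegrees * 3.14159265f / 180.0f);
    return CosToTarget >= CosHalfAngle;
}

// Weight gained this tick, summed over every cone that sees the target
// (summing is an assumption made for this sketch).
float SightWeightGain(const std::vector<SightConeSketch>& Cones, const Vec2& Eye,
                      const Vec2& Forward, const Vec2& Target, float DeltaSeconds) {
    float Gain = 0.0f;
    for (const SightConeSketch& Cone : Cones) {
        if (IsInCone(Cone, Eye, Forward, Target)) {
            Gain += Cone.DetectionRate * DeltaSeconds;
        }
    }
    return Gain;
}
```

Giving the narrow cone a higher detection rate than the wide ones reproduces the behavior described above: a target straight ahead builds up weight much faster than one at the edge of peripheral vision.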

Waypoint system

When the brain doesn’t provide any signals interesting enough to investigate, the AI defaults to a patrolling behavior. To handle it in a designer-friendly way, I implemented a waypoint system that is fully controllable in-editor.

Waypoint handling in editor

By adding any number of UWaypointHolderComponents to any actor BP, the menu shown in the GIF will appear in every instance of that BP in a level. It allows the designer to set a number of waypoints for each UWaypointHolderComponent and a debug color. Once drawn, each waypoint will appear as a SceneComponent in the BP instance, so the designer can move its transform around in-engine and quickly modify the AI's patrol path without having to touch any code or even the BT.

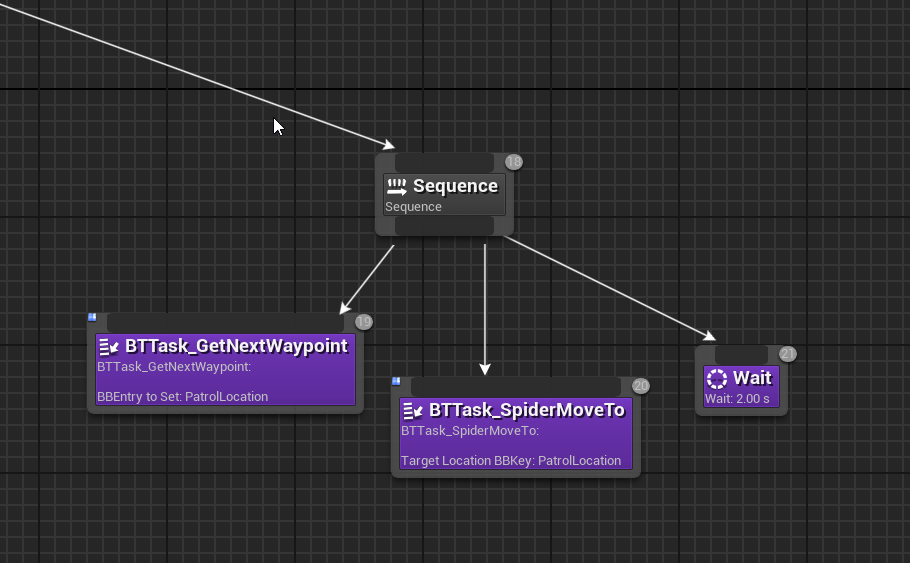

If there is more than one set of waypoints, the API will return the correct one based on the brain's current suspicion level. This makes a BT sequence like the one shown work for multiple suspicion levels, making it possible, for instance, to have different patrol paths for different alertness states!

In-game behavior

UWaypointHolderComponent exposes a BP API that allows transparent fetching of the next endpoint, in this case by a BT task.
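
The idea of picking a waypoint set by suspicion level and handing out the next point can be sketched in plain C++ as follows. This is a hypothetical illustration, not the component's real API: the names are invented, each set is assumed to be tagged with a minimum suspicion level, the most specific matching set wins, and patrols are assumed to loop back to the first point.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

struct Waypoint { float X; float Y; float Z; };

// Hypothetical patrol path, used once the brain's suspicion level reaches
// MinSuspicionLevel.
struct WaypointSetSketch {
    int MinSuspicionLevel = 0;
    std::vector<Waypoint> Points;
    std::size_t NextIndex = 0;  // where this patrol will continue
};

// Hypothetical stand-in for the fetching logic behind the waypoint API.
class WaypointHolderSketch {
public:
    void AddSet(int MinSuspicionLevel, std::vector<Waypoint> Points) {
        WaypointSetSketch Set;
        Set.MinSuspicionLevel = MinSuspicionLevel;
        Set.Points = std::move(Points);
        Sets.push_back(std::move(Set));
    }

    // Picks the set with the highest MinSuspicionLevel that does not exceed
    // SuspicionLevel, returns its next waypoint and advances the patrol,
    // wrapping around at the end. Returns nullptr if no set matches.
    const Waypoint* GetNextWaypoint(int SuspicionLevel) {
        WaypointSetSketch* Best = nullptr;
        for (WaypointSetSketch& Set : Sets) {
            if (Set.Points.empty() || Set.MinSuspicionLevel > SuspicionLevel) {
                continue;
            }
            if (Best == nullptr || Set.MinSuspicionLevel > Best->MinSuspicionLevel) {
                Best = &Set;
            }
        }
        if (Best == nullptr) {
            return nullptr;
        }
        const Waypoint* Result = &Best->Points[Best->NextIndex];
        Best->NextIndex = (Best->NextIndex + 1) % Best->Points.size();
        return Result;
    }

private:
    std::vector<WaypointSetSketch> Sets;
};
```

Because the caller only passes a suspicion level and gets a point back, the same BT task can drive a calm patrol and an alerted one without knowing which set it is walking.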

Credits and Links

Special thanks to David Mikulic for the procedurally animated spider the AI is driving, and Rae Zeviar for the amazing Victorian mansion modular kit I used for the first clip on this page! Check out their portfolios :)

Take a peek at the code on the GitHub repo :)

Written by Jo Colomban
